Difference between revisions of "VMware vSphere Hypervisor"

Revision as of 18:42, 17 May 2011

VMware vSphere Hypervisor is VMware's freely available bare-metal hypervisor. It may offer a potential replacement for our existing virtualization solution. VMware offers a free license for vSphere Hypervisor that allows unlimited use of the basic virtualization features but withholds some of the nicer features of VMware, such as hot-adding hardware to virtual systems, VMotion, and managing virtual machines via a command line interface. The current test setup uses a free license associated with my own account, but a shared VMware account for NPG admins will probably be needed in the future so that each of us can manage our licenses and use the web interface at go.vmware.com, which offers some useful tools for managing VMware infrastructure.

Current Status

Pepper is currently acting as our test bed for vSphere Hypervisor. Tomato may become a second test bed if it is needed.

I have successfully tested a process for moving machines from VMware Server to ESXi using VMware Converter. This process will be detailed on this page in the near future.

Installation and Initial Configuration

Installing vSphere Hypervisor is a fairly straightforward process.

First, you need to download the ISO image of the vSphere Hypervisor installer from the VMware downloads website. You need a free VMware account to access these downloads. Burn the ISO to a disc and boot the system from it.

The actual VMware installer is very straightforward. It will ask you to accept the license agreement, and whether you want to install or upgrade. After that you select the disk onto which you want to install the hypervisor, and the installer will do the rest of the work.

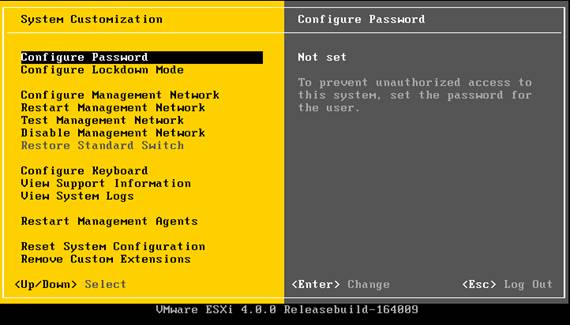

Once the install is finished you're presented with a simple text-based interface called the Direct Console User Interface (dcui). here's what it looks like:

For a more detailed walkthrough of the post-install configuration including more screenshots see this tutorial.

All that you really need to do from this interface is to set the system's root password and configure the Management Network.

ESXi Network Configuration notes

The management network refers to the network connection that you will use to manage the hypervisor remotely. In general this should be a connection to the UNH network; otherwise you will only be able to manage your virtual machines from inside the server room. The DCUI only allows you to configure the management network interface, but once that is configured you can use the vSphere Client to do more detailed network configuration.

The management interface only accepts one network configuration. This means that if a hypervisor is connected to both the farm and UNH networks you should configure the management network with a UNH network address so that it can be managed from outside of the server room. This does not mean that your virtual machines cannot have multiple network connections. The restriction only applies to the management network configuration that is set up through the machine's direct console interface. The vSphere Client can be used to configure multiple networks (bridged, internal to VMware, or otherwise) to use with your virtual machines.

In the case of pepper, which only has one connection to the farm switch, I needed to set the VLAN to 2 in order to allow the management network interface to have a UNH network address. This should apply equally to tomato if it becomes a second hypervisor system.

Problems and Limitations

Limited Hardware Compatibility

vSphere Hypervisor has a very limited hardware compatibility list.

I first encountered this problem when configuring pepper as an ESXi test machine. Pepper's RAID card is not supported by ESXi, and the installer did not recognize any RAID volumes. I was only able to install ESXi by bypassing the RAID card and plugging a couple of disks directly into the motherboard. Gourd's RAID card (Areca ARC-1220) is not listed among the models that ESXi supports. VMware does, however, list support for ARC-1222 model RAID cards using the arcmsr driver version 1.20.0x.15.vmk-90605.3.

This may or may not be a problem for using Gourd as a vSphere hypervisor host. The driver version used by gourd's current Linux system is listed as:

 version:     Driver Version 1.20.00.15.RH1 2008/02/27
 description: ARECA (ARC11xx/12xx/13xx/16xx) SATA/SAS RAID HOST Adapter

This appears to be the same driver version: 1.20.00.15 on gourd matches the 1.20.0x.15 that VMware lists for the ARC-1222. That could mean that ESXi will work with gourd's Areca card, but we won't know until it can be tested and verified.
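The version comparison above can be sketched in a few lines of Python. This is only an illustration of the reasoning, not a compatibility test: it assumes that the "x" in VMware's listed version string acts as a single-character wildcard and that the trailing suffixes (vmk-90605.3, RH1) are build tags that can be ignored. The function names are made up for this example.

```python
import re


def core_version(version):
    """Return the leading dot-separated fields made only of digits or 'x'.

    Anything from the first non-matching field onward (for example
    'vmk-90605' or 'RH1') is treated as a build tag and dropped.
    This is an assumption about how the strings are structured.
    """
    fields = []
    for field in version.split("."):
        if re.fullmatch(r"[0-9x]+", field):
            fields.append(field)
        else:
            break
    return fields


def fields_match(a, b):
    """Compare two fields character by character, treating 'x' as a wildcard."""
    if len(a) != len(b):
        return False
    return all(ca == cb or "x" in (ca, cb) for ca, cb in zip(a, b))


def same_core_version(v1, v2):
    """True if both version strings share the same core version number."""
    c1, c2 = core_version(v1), core_version(v2)
    return len(c1) == len(c2) and all(fields_match(a, b) for a, b in zip(c1, c2))


esxi_listed = "1.20.0x.15.vmk-90605.3"   # driver VMware lists for the ARC-1222
gourd_linux = "1.20.00.15.RH1"           # driver reported by gourd's Linux system

print(same_core_version(esxi_listed, gourd_linux))
```

A matching version number only says that the two drivers come from the same release. It says nothing about whether the ESXi build of the driver recognizes the ARC-1220, which still has to be tested on the hardware.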

Limited management interfaces

Free VMware licenses are limited to managing virtual machines only via the vSphere Client (Windows only). Some tools for managing hypervisors are provided at go.vmware.com, but they require Microsoft browser plugins that do not work under Linux. Paid licenses grant access to VM management using the vSphere command line and the VIX API for Perl, C, and Microsoft COM (C# and Visual Basic).

In order to test VMware on an NPG workstation I restored the original Windows Vista install from the Dell restore disk to benfranklin, which now dual-boots Windows and Fedora 14. This means that only one machine is currently available to NPG members to manage systems on vSphere Hypervisors.

No Software RAID 1

vSphere Hypervisor does not offer any software-based RAID 1 support. This means that all hard drive mirroring needs to be done with a hardware RAID card (provided that it is supported by the hypervisor). It is possible to combine several disks into one large data volume, but this provides no redundancy for virtual machine data.
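The trade-off between a combined volume and a mirror can be illustrated with some simple arithmetic. The sketch below is not tied to any VMware tool. The disk sizes are hypothetical, and the two functions only model the general behavior of a spanned volume versus a RAID 1 mirror.

```python
def spanned_volume(disk_sizes_gb):
    """Disks concatenated into one volume: capacities add up, nothing is duplicated."""
    return {
        "usable_gb": sum(disk_sizes_gb),
        "tolerates_disk_failure": False,  # losing any member loses data
    }


def raid1_mirror(disk_sizes_gb):
    """Mirror: every disk holds the same data, so usable space is the smallest member."""
    return {
        "usable_gb": min(disk_sizes_gb),
        "tolerates_disk_failure": len(disk_sizes_gb) >= 2,
    }


disks = [500, 500]  # hypothetical pair of 500 GB disks

print("spanned:", spanned_volume(disks))
print("mirror: ", raid1_mirror(disks))
```

With two equal disks the spanned volume offers twice the space of the mirror, but a single disk failure costs virtual machine data, which is why mirroring on ESXi depends on a supported hardware RAID card.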