Difference between revisions of "Gourd/Einstein Migration Plan"

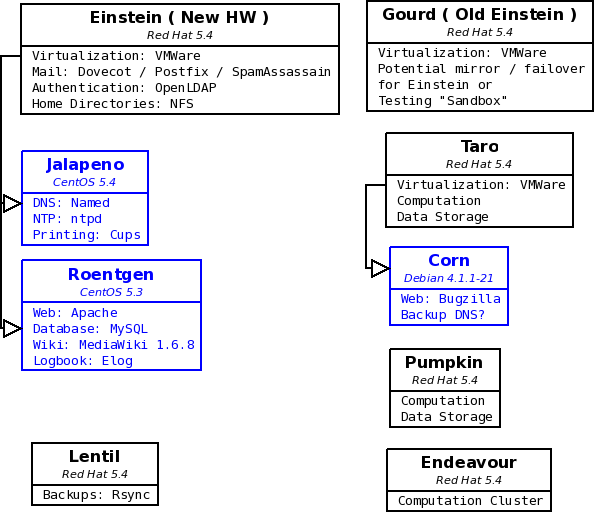


Latest revision as of 19:06, 16 June 2010

This page is for notes on the steps needed in order to fully migrate from the current Einstein system to the new Gourd hardware and Einstein System.

System Diagrams

These notes and diagrams describe the migration from the old system layout with the original hardware Einstein to the current Gourd hardware / Einstein VM design.

First Proposed Redesign

This updated diagram incorporates Maurik's suggested changes:

Questions / Concerns

This design attempts to minimize the number of virtual machines, which frees up a couple of host names. I made Corn a backup DNS server that would run on Taro because this is the best way to fail over in case something happens with Jalapeno. Even if Einstein goes down entirely, we'll at least have one DNS server still running.

Corn is running Debian and is designed to be a standalone Bugzilla appliance. It is not known whether this will make it difficult to add the DNS functionality (it should be as simple as installing the service and copying the configuration from Jalapeno). This needs to be investigated. An alternative is leaving Corn as a standalone appliance and using the Okra virtual machine to provide printing as well as acting as a backup DNS.

It is not known yet whether the old Einstein will be usable as a mirror / failover for the new Einstein. This should be investigated. If it is, we should also investigate whether it will need a Red Hat license, or if CentOS will be sufficient.

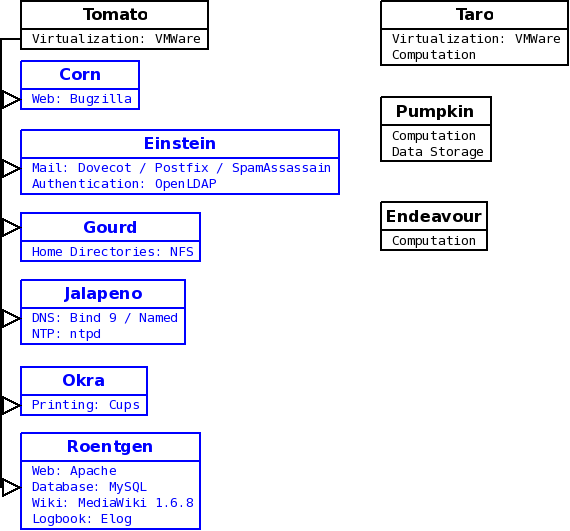

Second Proposed Redesign

This design is deprecated, but left here for reference purposes.

Gourd

Gourd will serve as the file server for home folders and mail, as well as the virtualization host for Einstein and other virtual machines such as Roentgen and Corn.

Migration Checklist for Gourd

- Drives and RAID

- Configure hard drives and RAID arrays as outlined here

- Copy the 250GB system drive pass-thru disk to a 250GB RAID 1 volume on two 750GB disks (Slots 1 and 2) done

- Remaining 500GB on each drive spanned to a 1TB RAID 0, mounted on /scratch done

- Two 750GB disks as pass-thru, set up as software RAID (Slots 3 and 4) done

- 500GB RAID 1 for home folders (/dev/md0) mounted on /home done

- 100GB RAID 1 for Einstein's /var/spool/mail (/dev/md1) mounted on /mail done

- 150GB RAID 1 for virtual machines (/dev/md2) mounted on /vmware and added as a local datastore in VMWare done

- Two 750GB drives in Slots 7 and 8 as hot spares done

- System Setup

- NFS

- Set up NFS Shares for /home and /mail done - Currently /mail share accessible by Tomato, need to change to Einstein at switchover

- Create npghome.unh.edu alias interfaces on Gourd done

- Add to DNS configs done - Assigned farm IP of 10.0.0.240

- Needs to be added to Servers in LDAP for iptables to work done on Tomato

- Change Automount configuration in LDAP (possibly also on clients) to use npghome:/home instead of einstein for /net/home done on tomato

- Ran into some trouble with this setup on feynman, could login but apps wouldn't run and Gnome would eventually freeze. Tested several possibilities:

- Setting npghome to Einstein's IP address in hosts file worked

- Bringing up npghome alias interfaces on Einstein worked

- On a hunch, tried bringing down the firewall on Gourd, and then I could log in and mount /net/home to npghome with no issues. Fixed the firewall configuration: corrected the ports, which were set incorrectly; added eth0.2 as the unh interface instead of eth1; and pointed the iptables script at tomato's ldap, since it contains the entry for npghome, which needed to be added to the firewall. Automount to npghome is now working on feynman, parity, gourd, and tomato without issue as of 01/16.

- Backups

- Change rsync-backup.conf so that /mail and /home get backed up

- Create new LDAP group for backups so that gourd doesn't get backed up a second time as npghome - change backup script in Lentil to use new group

- Virtual Machines

- Copy virtual machines from Taro to /vmware on gourd

- Corn done

- Roentgen

Pre-Migration Setup Notes

This section contains notes and information from before and during the migration process. It's here mainly for reference purposes. Eventually it should either be moved to another page or removed from this one, once we've finished getting this page updated with the current Gourd setup information.

Important things to remember before this system takes on the identity of Einstein

- The ssh fingerprint of the old einstein needs to be imported.

- Obviously, all important data needs to be moved: Home Directories, Mail, DNS records, ... (what else?)

- Fully test functionality before switching!

Configurations Needed

- RAIDs need to be set up on the Areca card.

- /home, /var/spool/mail and virtual machines will be stored on software raid due to the inability to read Areca RAID members without an Areca card (http://faq.areca.com.tw/index.php?option=com_quickfaq&view=items&cid=2:Firmware/BIOS&id=511:Q10050910&Itemid=1).

- Need to copy the system drive from the passthrough to a Raid1 mirror

- Mail system needs to be set up

- Webmail needs to be set up. Uses Apache?

- DNS needs to be set up.

- Backup needs to be made to work.

- Backups work. Copied rsync.backup from taro for now, need to change to include homes after the changeover from Einstein.

- rhn_config - I tried this but our subscriptions seem messed up. (Send message to customer support 11/25/09)

- Denyhosts needs to be set up.

- Appears to be running as of 1/05. Should it be sending e-mail notifications like Einstein/Endeavour?

- NFS needs to be set up.

- Home folders will be independent of a particular system. Gourd will normally serve the home folders via an aliased network interface (http://www.linuxhomenetworking.com/wiki/index.php/Quick_HOWTO_:_Ch03_:_Linux_Networking#Multiple_IP_Addresses_on_a_Single_NIC). Automount configuration for each machine will need to change so that /net/home refers to /home on npghome.unh.edu (132.177.91.210). This alias can be configured on a secondary machine if gourd needs to go down, and should be transparent to the user.

- Need to create a share to provide the mail spool to Einstein. LDAP database is small enough that it may be easier to just store that on the Einstein VM.

Initialization

Server arrived 11/24/2009, was unpacked, placed in the rack and booted on 11/25/2009.

Initial configuration steps are logged here:

- Initial host name is gourd (gourd.unh.edu) with eth0 at 10.0.0.252 and eth0.2 (VLAN) at 132.177.88.75

- The ARECA card is set at 10.0.0.152. The password is changed to the standard ARECA card password, user is still ADMIN.

- The IPMI card was configured using the SuperMicro ipmicfg utility. The net address is set to 10.0.0.151. Access is verified by IPMIView from Taro. The grub.conf and inittab lines are changed so that SOL is possible at 19200 baud.

- The LDAP configuration is copied from Taro. This means it is currently in client ldap mode, and needs to be changed to an ldap server before production. You can log in as yourself.

- The autofs configuration is copied from Taro. The /net/home and /net/data directories work.

- The sudoers is copied from Taro, but it does not appear to work - REASON: pam.d/system-auth

- Added "auth sufficient pam_ldap.so use_first_pass" to /etc/pam.d/system-auth - now sudo works correctly.

Proposed RAID Configuration

Considering that "large storage" is both dangerous and inflexible, and that we really don't want a large volume for /home or /var/spool/mail, the following configuration may actually be ideal. We use only RAID1 for the mail storage spaces, so that there is always the option of breaking the RAID and using one of the disks in another server for near-instant failover. This needs to be tested for Areca RAID1. We also need to consider that a RAID card has "physical drives", "Raid Sets" and "Volume Sets". The individual physical drives are grouped into a "Raid Set". This Raid Set is then partitioned into "Volume Sets", and the Volume Sets are exposed to the operating system as disks. These disks can then (if you insist, as you do for the system) be partitioned by the operating system using parted or LVM into partitions, which will hold the filesystems.

We only need 4 of the drive bays to meet our core needs. The other 4 drive bays can hold additional storage for less critical data, extra VMs, a hot spare, and an empty bay, which can be filled with a 1 TB drive and configured like a "Time Machine" to automatically back up /home and the VMs, so that this system no longer depends on Lentil for its core backup. (Just an idea for the future.)

Disks and Raid configuration

| Drive Bay | Disk Size | Raid Set | Raid level |
|---|---|---|---|
| 1 | 250 GB | Set 1 | Raid1 |
| 2 | 250 GB | Set 1 | Raid1 |
| 3 | 750 GB | Set 2 | Raid1 |
| 4 | 750 GB | Set 2 | Raid1 |
| 5 | 750 GB | Set 3 | Raid1 |
| 6 | 750 GB | Set 3 | Raid1 |
| 7 | 750 GB | Hot Swap | None |
| 8 | None | empty | None |

Volume Set and Partition configuration

| Raid set | Volume set | Volume size | Partitions |
|---|---|---|---|
| Set 1 | Volume 1 | 250 GB | System: (/, /boot, /var, /tmp, /swap, /usr, /data) |
| Set 2 | Volume 2 | 500 GB | Home Dirs: /home |
| Set 2 | Volume 3 | 100 GB | Var: Mail and LDAP |
| Set 2 | Volume 4 | 150 GB | Virtual Machines: Einstein/Roentgen/Corn |
| Set 3 | Volume 5 | 250 GB | Additional VM space |
| Set 3 | Volume 6 | 500 GB | Additional Data Space |
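As a quick sanity check on the tables above, the volume sizes can be summed against the usable capacity of each set. This is only a sketch: it assumes each RAID 1 set offers the capacity of a single member disk (250 GB for Set 1, 750 GB for Sets 2 and 3), and the dictionary and function names are illustrative rather than part of any tool on Gourd.

```python
# Usable capacity per RAID 1 set, assumed equal to one member disk (GB).
raid_sets_gb = {"Set 1": 250, "Set 2": 750, "Set 3": 750}

# Volume sizes (GB) as listed in the volume set table.
volumes_gb = {
    "Set 1": {"Volume 1": 250},
    "Set 2": {"Volume 2": 500, "Volume 3": 100, "Volume 4": 150},
    "Set 3": {"Volume 5": 250, "Volume 6": 500},
}


def unallocated_gb(raid_set):
    """Return the space left in a RAID set after its volumes are carved out."""
    return raid_sets_gb[raid_set] - sum(volumes_gb[raid_set].values())


for name in raid_sets_gb:
    print(name, "unallocated:", unallocated_gb(name), "GB")
```

Under these assumptions every set is fully allocated, so any new volume would have to come out of an existing one or out of the spare bays.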

RAID Configuration Notes

- Copied the 250GB system drive pass-thru disk to a 250GB RAID 1 volume on two 750GB disks (Slots 1 and 2)

- Something may have been wrong with the initial copy of the system. It booted from the RAID a couple of times but didn't come back up on reboot this weekend. I am attempting the copy again from the original unmodified system drive using ddrescue -- Adam 1/11/10

- Discovered that the problem wasn't the copy, it was that the BIOS allows you to choose the drive to boot from by the SCSI ID/LUN, and had somehow gotten set to boot from 0/1 (/dev/sdb - the empty scratch partition) instead of 0/0 which had the system on it. I can still boot the original system drive without issue. -- Adam 1/12/10

- Remaining 500GB on each drive spanned to a 1TB RAID 0, mounted on /Scratch

- Two 750GB disks as pass-thru, set up as software RAID (Slots 3 and 4)

- 500GB RAID 1 for home folders (/dev/md0) currently mounted in /mnt/newhome temporarily, needs to be moved to /home during migration

- 100GB RAID 1 for mail (/dev/md1) mounted in /Mail

- 150GB RAID 1 for virtual machines (/dev/md2) mounted in /VMWare and added as a local datastore in VMWare

- Currently two 750GB drives in Slots 5 and 6 for testing

- Two 750GB drives in Slots 7 and 8 as hot spares

Einstein VM ( Currently Tomato )

- VM Setup done

- Create the Virtual Machine, Install / setup OS done

- Tomato is currently a CentOS 5.4 machine running on gourd

- Ran into issues with rhn since we don't seem to have a spare license to register tomato. We used CentOS so that we could install and update necessary packages and test out the new configuration, but we can set up Tomato with Einstein's license once that is free if needed, and then copy configs over from the current machine.

- Tomato virtual machine is set up to boot when Gourd boots. Tested this setup and gourd comes up successfully after a reboot. Initial login on gourd is a bit sluggish as you have to wait for tomato to finish booting, but works fine after a few seconds.

- LDAP Configuration done

- Copied LDAP configuration from Einstein. Have tested authentication with tomato's LDAP on feynman, gluon, parity, gourd, and tomato itself. Seems to work as well as Einstein.

- Firewall setup done

- Used old Einstein's iptables-npg config. Probably need to clean up some of the unused rules from the old machine, though.

- Mail Setup

- Copy over configs for Dovecot, Postfix, Spamassassin, Mailman and Squirrelmail done

- Set up mail services using Einstein's current setup. Copied over CMUSieve plugin from Einstein.

- Dovecot seems to have some issues accessing mail over NFS mounts. Initially received the following error in /var/log/maillog: "dovecot: Mailbox indexes in /var/spool/mail/aduston are in NFS mount. You must set mmap_disable=yes to avoid index corruptions. If you're sure this check was wrong, set nfs_check=no". Changed the mmap_disable setting in /etc/dovecot.conf to yes. Considering making other changes according to the Dovecot wiki article on NFS (http://wiki.dovecot.org/NFS). Will test to make sure they don't break anything.

- Mailman still needs to be set up

- Need to set up Apache for Squirrelmail. Also need /var/www from old einstein for automount. Should websites from Einstein run on the new VM, or move to Roentgen? done - websites will be left alone for now but should probably stop being served from einstein

- Squirrelmail works, had some trouble caused by incorrect permissions on config files, now fixed.

- Copied /var/www/html from Einstein. Need to add entry in export for automount
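The Dovecot NFS fix above comes down to one setting, so it is easy to verify after copying configs around. The sketch below is a hypothetical helper, not something installed on Tomato: it assumes mmap_disable is a flat top-level `key = value` line in /etc/dovecot.conf and ignores Dovecot's braced sections entirely.

```python
import re


def last_value(conf_text, key):
    """Return the last value assigned to `key` in conf_text, or None if unset.

    Comments (everything after '#') are ignored; later assignments override
    earlier ones.
    """
    value = None
    pattern = re.compile(r"^" + re.escape(key) + r"\s*=\s*(.*)$")
    for line in conf_text.splitlines():
        line = line.split("#", 1)[0].strip()
        match = pattern.match(line)
        if match:
            value = match.group(1).strip()
    return value


# Sample text standing in for /etc/dovecot.conf (illustrative only).
sample = "# mmap_disable = no\nprotocols = imap\nmmap_disable = yes\n"
print("mmap_disable =", last_value(sample, "mmap_disable"))
```

To check the real file, read /etc/dovecot.conf into a string and pass it in place of `sample`.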

Day of Migration Checklist

- Need to send out e-mails reminding users of necessary steps on their end to prepare for the migration on Sunday. Sent initial e-mail, currently drafting the reminder before Friday night

- Prepare Tomato to take over for Einstein

- We should do an initial rsync of mail and homes on Friday night or Saturday so that the day-of sync will take less time.

- Tomato can be reconfigured to prepare for the switchover ahead of time. We will need to log in via the VMWare interface and shut down the network interfaces to avoid conflicts with the current Einstein, and then make configuration changes so that it identifies itself as Einstein (listed below).

- At 1pm Sunday we will bring down mail on the current Einstein and then boot the newly reconfigured Einstein VM.

- Test e-mail, webmail, ldap etc. Log in from several workstations to make sure things are authenticating correctly.

- If this is successful we can begin the process of switching automount to use npghome on workstations.

- This may not require a reboot. I tested it on feynman and all I had to do was make the changes to auto.net and then restart autofs while only logged in as root. We can try it this way but be prepared to reboot if there's some issue with nfs.
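The early rsync of mail and homes mentioned in the checklist could be scripted along these lines. This is a sketch under stated assumptions, not the procedure that was actually used: it assumes the sync is pulled onto Gourd from the host einstein, that Einstein's /home and /var/spool/mail map to /home and /mail on Gourd, and that `--delete` and `--numeric-ids` are wanted. The function name is hypothetical. The example prints dry-run commands only.

```python
import shlex


def rsync_command(src_host, src_path, dest_path, dry_run=False):
    """Build an rsync argv list that mirrors src_host:src_path/ into dest_path/."""
    args = ["rsync", "-a", "--delete", "--numeric-ids"]
    if dry_run:
        args.append("--dry-run")
    # Trailing slashes make rsync copy directory contents, not the directory itself.
    args.append(f"{src_host}:{src_path.rstrip('/')}/")
    args.append(dest_path.rstrip("/") + "/")
    return args


# Assumed source and destination pairs; adjust to the real layout before use.
for src, dest in [("/home", "/home"), ("/var/spool/mail", "/mail")]:
    print(shlex.join(rsync_command("einstein", src, dest, dry_run=True)))
```

Running the same commands again on the day of the migration, without the dry-run flag, would then only transfer what changed since the first pass.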

List of configuration files where "tomato" needs to be changed back to "einstein"

Tomato

- /etc/sysconfig/network
- change IP Addresses in network-scripts
- /etc/openldap/slapd.conf done
- /etc/httpd/conf/httpd.conf done
- /etc/httpd/conf.d/mailman.conf done
- /etc/dovecot.conf done
- /etc/postfix/main.cf done
- /etc/mailman/mm_cfg.py done
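After the files in this list have been edited, a scan for leftover references to the temporary host name can catch anything that was missed. The helper below is a hypothetical sketch that works on text already read from a file; the sample string is made up for illustration and is not taken from the real configs.

```python
import re


def find_hostname_refs(text, hostname):
    """Return (line_number, line) pairs where hostname appears as a whole word."""
    pattern = re.compile(r"\b" + re.escape(hostname) + r"\b", re.IGNORECASE)
    return [
        (number, line)
        for number, line in enumerate(text.splitlines(), start=1)
        if pattern.search(line)
    ]


# Made-up config text; in practice, read each file from the list above.
sample = "ServerName tomato.unh.edu\nmyhostname = einstein.unh.edu\n"
for number, line in find_hostname_refs(sample, "tomato"):
    print(f"line {number}: {line}")
```

The whole-word match means an FQDN such as tomato.unh.edu is reported, while unrelated words that merely contain the name are not.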

Gourd

- /usr/local/bin/netgroup2iptables.pl -- set to authenticate to tomato. Change back to einstein.
- /etc/exports -- Change /mail share so that it allows connections to Einstein's IP addresses, not tomato's

Other Machines

Other workstations and servers will have to have their configurations for /etc/auto.net changed so that home is mounted via npghome and not einstein. Other configs can be left the same apart from those whose ldap configurations were temporarily switched to use tomato for testing - Probably just Gourd, Feynman, Gluon and possibly parity. done for clients
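The /etc/auto.net change described above is a one-host substitution, sketched below. The map line format shown (key, options, then host:/path) and the mount options are assumptions for illustration; the real auto.net entries may differ, and the function name is hypothetical.

```python
import re


def retarget_home(map_text, old_host="einstein", new_host="npghome"):
    """Replace old_host:/home with new_host:/home in automount map text."""
    pattern = re.compile(r"(?<![\w.-])" + re.escape(old_host) + r":/home\b")
    return pattern.sub(new_host + ":/home", map_text)


# Assumed map entries; only the home entry should change.
sample = "home -rw einstein:/home\ndata -rw einstein:/data\n"
print(retarget_home(sample), end="")
```

Entries for other shares are left alone, so /net/data would keep pointing wherever it did before. As noted in the checklist, autofs needs to be restarted after the map is changed.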